FC-Model

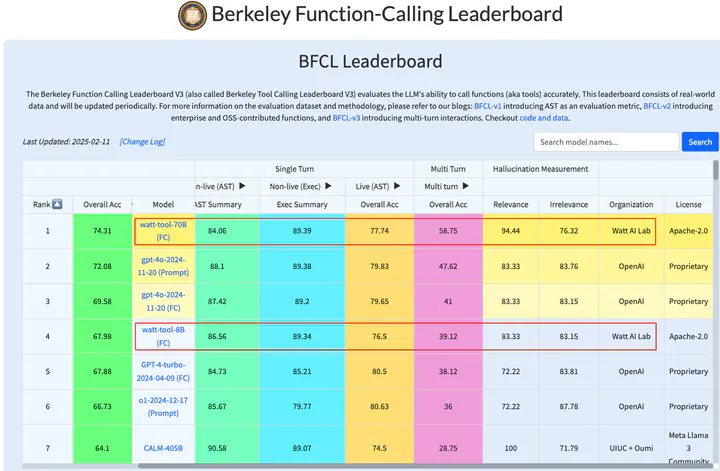

We open-sourced watt-tool-70B, a model for function calling and tool usage, along with an 8B variant. The 70B model is based on LLaMa-3.3-70B-Instruct and is optimized for tool usage and multi-turn dialogue. It achieves state-of-the-art performance on the Berkeley Function-Calling Leaderboard (BFCL).

Model Description

This model is specifically designed to excel at complex tool-usage scenarios that require multi-turn interactions, making it well suited to powering platforms like Lupan, an AI-powered workflow-building tool. Trained on a carefully curated and optimized dataset, watt-tool-70B demonstrates superior capabilities in understanding user requests, selecting appropriate tools, and using them effectively across multiple turns of conversation.

Target applications: AI workflow building, as in Lupan (https://lupan.watt.chat/) and Coze.

Key Features

- Enhanced Tool Usage: Fine-tuned for precise and efficient tool selection and execution.

- Multi-Turn Dialogue: Optimized for maintaining context and effectively utilizing tools across multiple turns of conversation, enabling more complex task completion.

- State-of-the-Art Performance: Achieves top performance on the BFCL, demonstrating its capabilities in function calling and tool usage.

- Based on LLaMa-3.3-70B-Instruct: Inherits the strong language understanding and generation capabilities of the base model.
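To illustrate what multi-turn tool usage involves on the application side, here is a minimal sketch of a dispatch loop: each model turn emits tool calls, the caller executes them, and the results are appended to the conversation history for the next turn. This is not the model's official interface. It assumes, hypothetically, that the model emits calls as a Python-style list such as `[get_weather(city="Paris")]`; the tool names (`get_weather`, `add`), the history format, and the helper functions (`parse_calls`, `run_turn`) are illustrative, and the model outputs are stubbed as strings.

```python
import ast
from typing import Any, Callable, Dict, List, Tuple

# Hypothetical tools, for illustration only.
def get_weather(city: str) -> str:
    return f"Sunny in {city}"

def add(a: int, b: int) -> int:
    return a + b

TOOLS: Dict[str, Callable[..., Any]] = {"get_weather": get_weather, "add": add}

def parse_calls(text: str) -> List[Tuple[str, Dict[str, Any]]]:
    """Parse an (assumed) Python-style call list like '[add(a=1, b=2)]'."""
    tree = ast.parse(text.strip(), mode="eval")
    if not isinstance(tree.body, ast.List):
        raise ValueError("expected a list of calls")
    calls = []
    for node in tree.body.elts:
        # Only keyword-argument calls to plain function names are accepted.
        if not isinstance(node, ast.Call) or not isinstance(node.func, ast.Name) or node.args:
            raise ValueError("expected calls of the form name(key=value, ...)")
        # literal_eval only accepts literals, so no arbitrary code is executed.
        kwargs = {kw.arg: ast.literal_eval(kw.value) for kw in node.keywords}
        calls.append((node.func.id, kwargs))
    return calls

def run_turn(history: List[dict], model_output: str) -> List[dict]:
    """Record one model turn, execute its calls, and append each result."""
    history.append({"role": "assistant", "content": model_output})
    for name, kwargs in parse_calls(model_output):
        result = TOOLS[name](**kwargs)
        history.append({"role": "tool", "name": name, "content": str(result)})
    return history

# Usage: two stubbed model turns; later turns can see earlier tool results.
history = [{"role": "user", "content": "Weather in Paris, then add 2 and 3."}]
run_turn(history, '[get_weather(city="Paris")]')
run_turn(history, '[add(a=2, b=3)]')
print([m["content"] for m in history if m["role"] == "tool"])  # ['Sunny in Paris', '5']
```

Keeping the full history, including tool results, is what lets a later turn build on earlier calls; the model itself would receive that history as context in a real deployment.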

Training Methodology

watt-tool-70B is trained with supervised fine-tuning (SFT) on a specialized dataset designed for tool usage and multi-turn dialogue. We use chain-of-thought (CoT) techniques to synthesize high-quality multi-turn dialogue data.

The training process is inspired by the principles outlined in the paper “Direct Multi-Turn Preference Optimization for Language Agents”. We combine SFT with DMPO (Direct Multi-Turn Preference Optimization) to further improve the model’s performance on multi-turn agent tasks.
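For readers unfamiliar with DMPO, the following is a minimal sketch of a DMPO-style loss as we understand it from the cited paper; it is not the training code used for this model, and the exact formulation should be checked against the paper. It treats each agent turn as an action, takes the per-turn log-ratio between the policy and the reference model (summed over the turn's tokens), weights each turn by a discount factor phi(t, T) = (1 - gamma^(T - t)) / (1 - gamma^T), and applies a DPO-style logistic loss to the difference between the chosen and rejected trajectories. The names `dmpo_loss` and `discount_weight` and the values of `beta` and `gamma` are illustrative assumptions; the hyperparameters actually used are not stated here.

```python
import math
from typing import Sequence

def discount_weight(t: int, T: int, gamma: float) -> float:
    """Assumed DMPO turn weight phi(t, T); equals 1 at t = 0 and decays with t."""
    return (1 - gamma ** (T - t)) / (1 - gamma ** T)

def log_sigmoid(x: float) -> float:
    """Numerically stable log(sigmoid(x))."""
    if x >= 0:
        return -math.log1p(math.exp(-x))
    return x - math.log1p(math.exp(x))

def dmpo_loss(chosen_log_ratios: Sequence[float],
              rejected_log_ratios: Sequence[float],
              beta: float = 0.1, gamma: float = 0.9) -> float:
    """DMPO-style loss for one preference pair of multi-turn trajectories.

    Each entry is log pi_theta(a_t | s_t) - log pi_ref(a_t | s_t) for one
    agent turn. Illustrative sketch only; verify against the paper.
    """
    assert 0 < gamma < 1, "gamma must lie in (0, 1) to avoid division by zero"
    assert chosen_log_ratios and rejected_log_ratios, "trajectories must be non-empty"
    t_w, t_l = len(chosen_log_ratios), len(rejected_log_ratios)
    chosen = sum(discount_weight(t, t_w, gamma) * r for t, r in enumerate(chosen_log_ratios))
    rejected = sum(discount_weight(t, t_l, gamma) * r for t, r in enumerate(rejected_log_ratios))
    # Logistic (Bradley-Terry) loss on the weighted margin, as in DPO.
    return -log_sigmoid(beta * (chosen - rejected))

# Usage: when policy and reference agree on every turn, the loss is log 2.
print(round(dmpo_loss([0.0, 0.0], [0.0]), 4))  # 0.6931
```

Because the weights are normalized per trajectory length, trajectories with different numbers of turns can be compared directly, which is the length-mismatch issue the paper's formulation is meant to address.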